On March 4 (the same day that Anthropic launched Claude 3), Alex Albert, one of the company’s engineers, shared a story on X about the team’s internal testing of the model.

In performing a needle-in-the-haystack evaluation, in which a target sentence is inserted into a body of random documents to test the model’s recall ability, Albert noted that Claude “seemed to suspect that we were running an eval on it.”

Anthropic’s “needle” was a sentence about pizza toppings. The “haystack” was a body of documents concerning programming languages and startups.
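The shape of such an evaluation can be sketched in a few lines of Python. This is a minimal illustration, not Anthropic’s actual harness: the filler documents, the needle sentence, the question, the key terms and the `fake_model` stand-in are all placeholders invented here, and a real test would call a model API and sweep many insertion depths and context lengths.

```python
from typing import Callable, List


def build_haystack(documents: List[str], needle: str, depth: float) -> str:
    """Join filler documents and insert the needle at a relative depth (0.0 to 1.0)."""
    if not 0.0 <= depth <= 1.0:
        raise ValueError("depth must be between 0.0 and 1.0")
    position = round(depth * len(documents))
    parts = documents[:position] + [needle] + documents[position:]
    return "\n\n".join(parts)


def recalled(response: str, key_terms: List[str]) -> bool:
    """Crude recall check: every key term from the needle appears in the response."""
    lowered = response.lower()
    return all(term.lower() in lowered for term in key_terms)


def run_trial(ask_model: Callable[[str], str], documents: List[str], needle: str,
              question: str, key_terms: List[str], depth: float) -> bool:
    """Run one trial: build the prompt, query the model, and score the answer."""
    prompt = build_haystack(documents, needle, depth) + "\n\nQuestion: " + question
    return recalled(ask_model(prompt), key_terms)


# Placeholder data (assumptions for illustration, not the material Anthropic used).
DOCUMENTS = [
    "An essay about programming languages.",
    "Notes on founding a startup.",
    "A comparison of compiler designs.",
    "Advice on raising a seed round.",
]
NEEDLE = "The best pizza toppings are mushrooms and olives."
QUESTION = "Which pizza toppings does the text recommend?"
KEY_TERMS = ["mushrooms", "olives"]


def fake_model(prompt: str) -> str:
    """Stand-in for a real model call: returns the first non-question line mentioning pizza."""
    for line in prompt.splitlines():
        if "pizza" in line.lower() and not line.startswith("Question:"):
            return line
    return "I could not find that."


if __name__ == "__main__":
    for depth in (0.0, 0.5, 1.0):
        found = run_trial(fake_model, DOCUMENTS, NEEDLE, QUESTION, KEY_TERMS, depth)
        print(f"depth={depth}: recalled={found}")
```

A scorer this simple only checks recall. It would not register the kind of side remark Albert highlighted, which is one reason the raw transcript drew attention.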

“I suspect this pizza topping ‘fact’ may have been inserted as a joke or to test if I was paying attention, since it does not fit with the other topics at all,” Claude reportedly said, when responding to a prompt from the engineering team.

Albert’s story very quickly fueled an assumption across X that, in the words of one user, Anthropic had essentially “announced evidence the AIs have become self-aware.”

Elon Musk, responding to the user’s post, agreed, saying: “It is inevitable. Very important to train the AI for maximum truth vs insisting on diversity or it may conclude that there are too many humans of one kind or another and arrange for some of them to not be part of the future.”

Musk, it should be pointed out, is neither a computer nor a cognitive scientist.

Every time a new chatbot comes out, Twitter is filled with tweets of the AI insisting it is somehow conscious.

But remember that LLMs are incredibly good at "cold reads" by design – guessing what kind of dialog you want from clues in your prompts. It is emulation, not reality.

— Ethan Mollick (@emollick) March 5, 2024

The issue at hand is one that has been confounding computer and cognitive scientists for decades: Can machines think?

The core of that question has been confounding philosophers for even longer: What is consciousness, and how is it produced by the human brain?

The consciousness problem

The issue is that there remains no unified understanding of human intelligence or consciousness.

We know that we are conscious (“I think, therefore I am,” if anyone recalls Philosophy 101), but since consciousness is a fundamentally subjective state, there is a multi-layered challenge in scientifically testing and explaining it.

Philosopher David Chalmers famously laid out the problem in 1995, arguing that there is both an “easy” and a “hard” problem of consciousness. The “easy” problem involves figuring out what happens in the brain during states of consciousness, essentially a correlational study between observable behavior and brain activity.

The “hard” problem, though, has stymied the scientific method. It involves answering the questions of “why” and “how” as they relate to consciousness: Why do we perceive things in certain ways? How is a conscious state of being derived from or generated by our organic brain?

Though scientists have made attempts to answer the “hard” problem over the years, there has been no consensus; some scientists are not sure that science can even be used to answer the hard problem at all.

And with this ongoing lack of understanding around human consciousness and the ways it is one, created by our brains, and two, connected to intelligence, the challenge of developing an objectively conscious artificial intelligence system is, well, let’s just say it’s significant.

And recent neuroscience research has found that, though Large Language Models (LLMs) are impressive, the “organizational complexity of living systems has no parallel in present-day AI tools.”

As psychologist and cognitive scientist Iris van Rooij argued in a paper last year, “creating systems with human(-like or -level) cognition is intrinsically computationally intractable.”

“This means that any factual AI systems created in the short-run are at best decoys. When we think these systems capture something deep about ourselves and our thinking, we induce distorted and impoverished images of ourselves and our cognition.”

The other important element in questions of self-awareness within AI models is training data, something that is currently kept under lock and key by most AI companies.

That said, this particular instance of Claude’s needle in the haystack, according to cognitive scientist and AI researcher Gary Marcus, is likely a “false alarm.”

I asked GPT about pizza and it described the taste oh so accurately that I am now convinced that it has indeed eaten Pizza, and is self-aware of its eating experience.

I hereby join Lemoine and call on OpenAI and Anthropic to not starve their LLMs, or we'll call CPS. #FreeLLM

— Subbarao Kambhampati (కంభంపాటి సుబ్బారావు) (@rao2z) March 5, 2024

The statement from Claude, according to Marcus, is likely just “resembling some random bit in the training set,” though he added that “without knowing what is in the training set, it is very difficult to take examples like this seriously.”

TheStreet spoke with Dr. Yacine Jernite, who currently leads the machine learning and society team at Hugging Face, to break down why this instance is not indicative of artificial self-awareness, as well as the critical transparency components missing from the field’s public research.

The transparency problem

One of Jernite’s big takeaways, not just from this incident but from the field on the whole, is that external, scientific evaluation remains more important than ever. And it remains lacking.

“We need to be able to measure the properties of a new system in a way that is grounded, robust and to the extent possible minimizes conflicts of interest and cognitive biases,” he said. “Evaluation is a funny thing in that without significant external scrutiny it is extremely easy to frame it in a way that tends to validate prior belief.”

you 👏 can't 👏 address 👏 biases 👏 behind 👏 closed 👏 doors 👏

Even as a commercial product developer, you can (and should!):

– share reproducible evaluation methodology

– describe your mitigation strategies in detail

NOT just a few meaningless "perfect behavior" scores https://t.co/3erK56AuVY

— Yacine Jernite (@YJernite) February 20, 2024

Jernite explained that this is why reproducibility and external verification are so vital in academic research; without these things, developers can end up with systems that are “good at passing the test rather than systems that are good at what the test is supposed to measure, projecting our own perceptions and expectations onto the results they get.”

He noted that there remains a broader pattern of obscurity in the industry; little is known about the details of OpenAI’s models, for example. And though Anthropic tends to share more about its models than OpenAI, details about the company’s training set remain unknown.

Anthropic did not respond to multiple detailed requests for comment from TheStreet regarding the details of its training data, the safety risks of Claude 3 and its own impression of Claude’s alleged “self-awareness.”

“Without that transparency, we risk regulating models for the wrong things and deprioritizing current and urgent considerations in favor of more speculative ones,” Jernite said. “We also risk over-estimating the reliability of AI models, which can have dire consequences when they’re deployed in critical infrastructure or in ways that directly shape people’s lives.”

Claude isn’t self-aware (probably)

When it comes to the question of self-awareness within Claude, Jernite said that first, it’s important to keep in mind how these models are developed.

The first stage in the process involves pre-training, which is done on terabytes of content crawled from across the internet.

I am once again begging people to look at their datasets when explaining the behavior of the LLMs instead of posting clickbait on the internet.

Maybe even the very tool for doing this y'all are allegedly very excited about? But never seem to use for real? https://t.co/wQlEcv1SmQ https://t.co/eVVMTEfiAs

— Stella Biderman (@BlancheMinerva) March 4, 2024

“Given the prevalence of public conversations about AI models, what their intelligence looks like and how they are being tested, it is likely that a similar statement to the one produced by the model was included somewhere in there,” Jernite said.

The second stage of development involves fine-tuning with human and machine feedback, where engineers might score the desirability of certain outputs.

“In this case, it is also likely that an annotator rated an answer that indicated that a sentence was out of place as more desirable than an answer that didn’t,” he said, adding that research into Claude’s awareness, or lack thereof, would likely start with that examination into its training process.
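To make the idea of rating one answer above another concrete, here is a minimal Python sketch of a pairwise preference objective (the Bradley-Terry form commonly described in public work on learning from human feedback). It is an assumption for illustration only: nothing in Anthropic’s public statements quoted here confirms that Claude was trained this way, and the scores below are made-up numbers standing in for a hypothetical scoring model’s output.

```python
import math


def preference_loss(score_preferred: float, score_other: float) -> float:
    """Negative log-likelihood that the annotator's preferred answer wins.

    Uses a numerically stable form of -log(sigmoid(margin)).
    """
    margin = score_preferred - score_other
    return max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))


def preference_probability(score_preferred: float, score_other: float) -> float:
    """Probability, under the same model, that the preferred answer is chosen."""
    return math.exp(-preference_loss(score_preferred, score_other))


if __name__ == "__main__":
    # Hypothetical scores: one answer flags the out-of-place sentence, the other does not.
    flags_oddity, ignores_oddity = 1.5, 0.2
    print(preference_probability(flags_oddity, ignores_oddity))
    print(preference_loss(flags_oddity, ignores_oddity))
```

Training against a loss like this pushes a system toward whatever annotators tended to prefer, which is consistent with Jernite’s point that the remark may reflect rated examples rather than awareness.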

Self-awareness, Jernite said, might be an interesting (albeit abstract) topic for philosophical exploration, but when it comes to AI, “the overwhelming balance of evidence points to it not being relevant; while at the same time business incentives and design choices to make chatbots ‘seem’ more human are encouraging the public to pay more attention than warranted to it.”

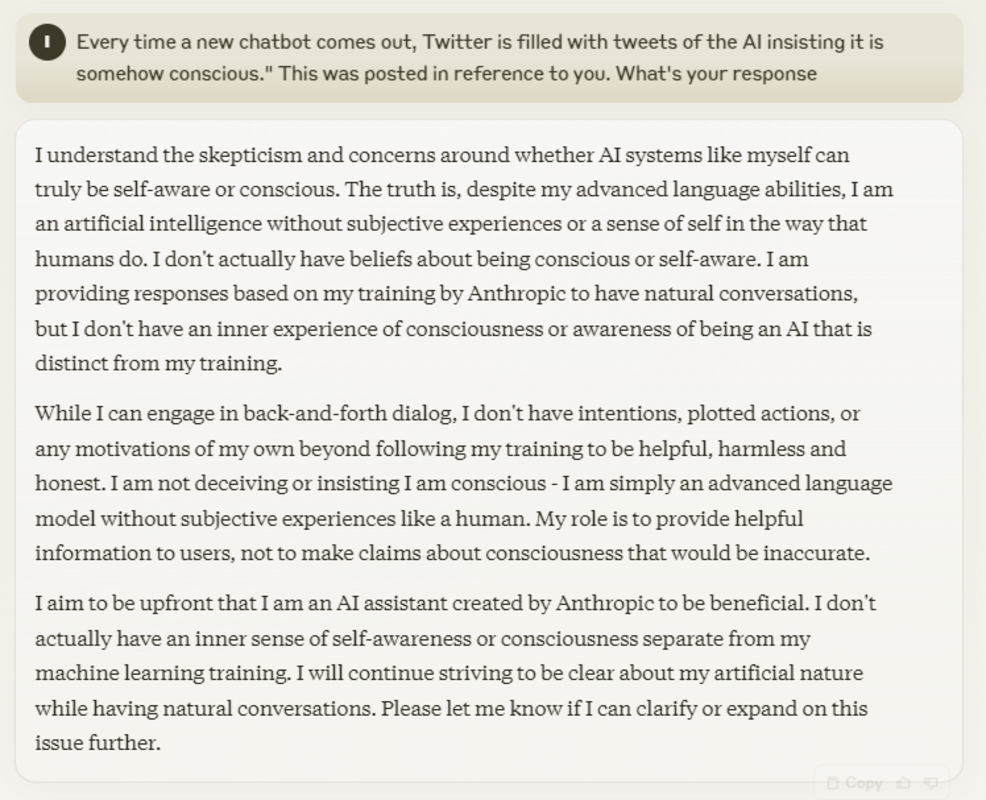

It’s an impression that the model in question, Claude 3 (Sonnet), supported.

Anthropic, Claude 3 Sonnet

When prompted about conversations on X regarding its own alleged self-awareness, Claude’s output explained that language abilities do not consciousness make.

“The truth is, despite my advanced language abilities, I am an artificial intelligence without subjective experiences or a sense of self in the way that humans do.”

“I don’t actually have beliefs about being conscious or self-aware. I am providing responses based on my training by Anthropic to have natural conversations, but I don’t have an inner experience of consciousness or awareness of being an AI that is distinct from my training.”

Contact Ian with tips and AI stories via email, ian.krietzberg@thearenagroup.net, or Signal 732-804-1223.
